Why is Kubernetes used?

Kubernetes is a container management (orchestration) tool. It deploys, scales, and manages containers such as those built with Docker.

Why is Kubernetes also called k8s?

When you look at the spelling of Kubernetes, there are 8 letters between the K and the s, so it is abbreviated as k8s.

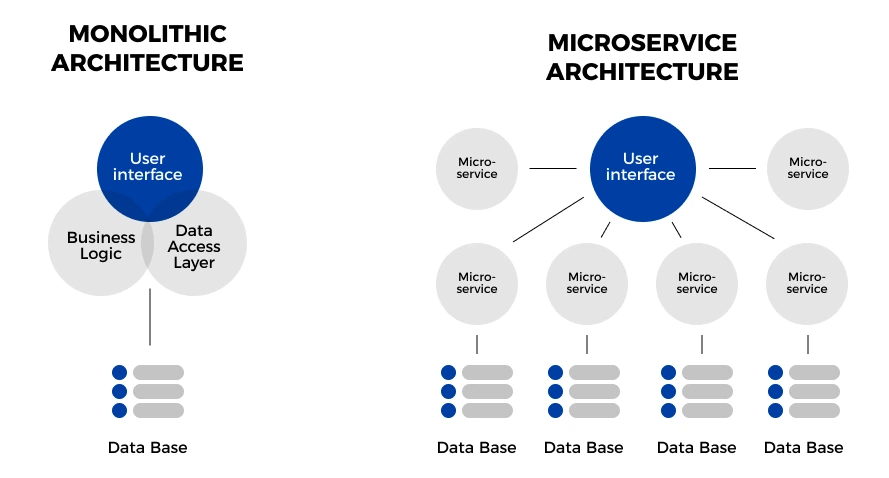

What is the difference between Monolithic architecture & Microservices?

Monolithic architecture :

A monolithic architecture is a singular, large computing network with one code base that couples all of the business concerns together.

Microservices :

Microservices are an architectural and organizational approach to software development where software is composed of small independent services that communicate over well-defined APIs. These services are owned by small, self-contained teams.

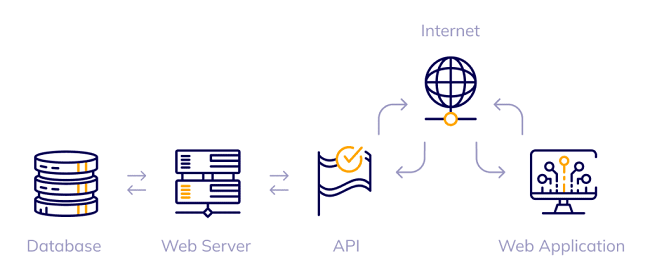

What is API?

An API (Application Programming Interface) is a set of defined operations and rules through which two software programs communicate and exchange data.

It acts like an entry point into a program or service.

What are containers in k8s?

Containers are lightweight, isolated packages that hold an application together with its dependencies. Kubernetes, originally designed and released by Google, is an open-source system for managing them. With Kubernetes, we can easily create a distributed cluster of hosted containers. Kubernetes offers robust container cluster orchestration tools, including health monitoring, deployment, failover, and auto-scaling.

Difference Between K8s AND Docker?

Docker is a containerization platform and runtime and Kubernetes is a platform for running and managing containers from many container runtimes. Kubernetes supports numerous container runtimes, including Docker.

Docker is a suite of software development tools for creating, sharing and running individual containers; Kubernetes is a system for operating containerized applications at scale.

Think of containers as standardized packaging for microservices with all the needed application code and dependencies inside. Creating these containers is the domain of Docker. A container can run anywhere, on a laptop, in the cloud, on local servers, and even on edge devices.

A modern application consists of many containers. Operating them in production is the job of Kubernetes. Since containers are easy to replicate, applications can auto-scale: expand or contract processing capacities to match user demands.

Docker and Kubernetes are mostly complementary technologies. However, Docker also provides its own system for operating containerized applications at scale, called Docker Swarm.

What is Kubernetes?

Kubernetes is an open-source container management tool that automates container deployment, container scaling, and load balancing.

It schedules, runs, and manages isolated containers running on virtual, physical, or cloud machines.

All major cloud providers support k8s.

k8s is written in Go (Golang).

History of k8s?

Kubernetes is also known as 'k8s'. The word Kubernetes comes from Greek and means pilot or helmsman.

Kubernetes is an extensible, portable, open-source platform that Google released in 2014.

It is mainly used to automate the deployment, scaling, and operation of container-based applications across a cluster of nodes.

It works with many container tools, such as Docker, and follows a client-server architecture.

Online Platforms for k8s

Kubernetes playground

Play with K8s

Play with K8s classroom

Cloud Based K8S Services:

GKE: Google Kubernetes Engine

AKS: Azure Kubernetes Service

Amazon EKS: Elastic Kubernetes Service

Kubernetes Installation Tool:

Minikube

Kubeadm

Problems with Scaling up the Container:

Containers could not easily communicate with each other.

Autoscaling and load balancing were not possible.

Containers had to be managed carefully.

Features of Kubernetes:

Orchestration (clustering of any number of containers running on different networks)

Auto-Healing

Support for hybrid cloud

Support for virtual cloud

Autoscaling (Vertical & Horizontal)

Load Balancing

Platform Independent (cloud/virtual/physical)

Fault Tolerance (Node/Pod failure)

Rollback (going back to the previous version)

Health Monitoring of containers

Batch Execution (one-time, sequential, parallel)

Difference Between k8s and Docker Swarm?

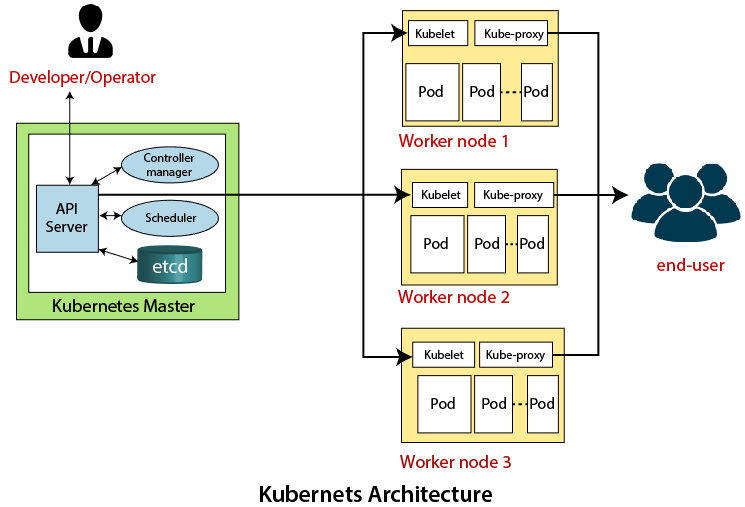

Kubernetes Architecture :

Pod: A group of one or more containers and the smallest deployable unit in k8s. A container has no IP address of its own; the pod has an IP address, which its containers share.

If a pod fails, that same pod is not brought back. A new pod is created in its place (when a controller such as a Deployment manages it), and its IP address will be different.

kubelet: The kubelet is a small, lightweight Kubernetes node agent that runs on each node in a Kubernetes cluster. It registers the node with the control plane and communicates with the Kubernetes master. It is also responsible for making sure that the containers of the pods assigned to its node are healthy and running correctly.

Kube-proxy: Kube-proxy is a network proxy that runs on each node in the cluster. It maintains the network rules that route traffic sent to a Service on to the pods behind that Service, which allows communication between different components of the Kubernetes cluster.

Service:

In Kubernetes, a service is an object that abstracts the underlying infrastructure and provides a unified access point for the applications that are running on the cluster. Services allow the applications to communicate with each other and are used to provide load balancing and service discovery.

cluster: In Kubernetes, a cluster is a set of nodes (physical or virtual machines) that are connected and managed by the Kubernetes software.

Container Engine (Docker, rkt (Rocket), containerd): A container engine is a software system that enables applications and services to be packaged and run in an isolated environment. Docker, rkt, and containerd are all examples of container engines that are used to run applications in containers.

The container engine also exposes containers on the ports specified in the manifest.

manifest - A manifest is a file that contains information about an application or service, such as its dependencies and configuration. Manifests are used to deploy applications and services to a Kubernetes cluster.

API Server (Application Programming Interface) -

The API Server is the entry point of K8S services. The Kubernetes API server receives the REST commands sent by the user. It validates the REST requests, processes them, and then executes them. After execution, the resulting state of the cluster is saved in 'etcd', a distributed key-value store. The API server is designed to scale horizontally, that is, by running more instances as load grows.

It is the front end of the control plane.

ETCD

It is an open-source, simple, distributed key-value store that is used to store cluster data. It is written in the Go programming language and runs as part of the master node.

It stores metadata (data about data) and information about the state of the cluster.

It is the consistent and highly available key-value store used as Kubernetes' backing store for all cluster data.

Controller Manager - The Kubernetes Controller Manager (also called kube-controller-manager) is a daemon that acts as a continuous control loop in a Kubernetes cluster. The controller monitors the current state of the cluster via calls made to the API Server and changes the current state to match the desired state described in the cluster’s declarative configuration.

Kubernetes resources are defined by a manifest file written in YAML. When the manifest is deployed, an object is created that aims to reach the desired state within the cluster. From that point, the appropriate controller watches the object and updates the cluster’s existing state to match the desired state.

Scheduler - The scheduler in a master node decides where work runs on the worker nodes. To do this, it tracks the resource usage information of every worker node.

In other words, it is a process that is responsible for assigning pods to the available worker nodes.

It handles the placement of newly created pods.

If you have specified where a pod should run (for example with a node selector), the scheduler honors that.

If you have not specified where a pod should run, the scheduler chooses a suitable node itself.

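Control plane: The control plane is the set of master components (API server, etcd, controller manager, and scheduler) that runs the cluster. It is not a web-based user interface; the web UI is a separate add-on called the Kubernetes Dashboard.

The control plane manages the worker nodes and the Pods in the cluster.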

What does kubectl stand for?

Kubectl is short for "kube control". It is the command-line tool for the Kubernetes platform and performs API calls against the cluster. Kubectl is the main interface that allows users to create (and manage) individual objects or groups of objects inside a Kubernetes cluster.

Role of the master node

A K8s cluster runs containers on bare metal, VM instances, cloud instances, or a mix of all of these.

K8s designates one or more of these machines as masters and the rest as workers.

The master runs a set of K8s processes that ensure the smooth functioning of the cluster. These processes are called the "control plane".

K8s processes - API Server, Controller Manager, Scheduler

The cluster can be multi-master for high availability.

The master runs the control plane to keep the cluster running smoothly.

What is minikube?

Ans:- Minikube is a tool which quickly sets up a local Kubernetes cluster on macOS, Linux, and Windows. It can deploy as a VM, a container, or on bare-metal.

Minikube is a pared-down version of Kubernetes that gives you all the benefits of Kubernetes with a lot less effort.

- Features of minikube

Ans:-

(a) Supports the latest Kubernetes release (+6 previous minor versions)

(b) Cross-platform (Linux, macOS, Windows)

(c) Deploy as a VM, a container, or on bare-metal

(d) Multiple container runtimes (CRI-O, containerd, docker)

(e) Direct API endpoint for blazing-fast image load and build

(f) Advanced features such as LoadBalancer, filesystem mounts, FeatureGates, and network policy

(g) Addons for easily installed Kubernetes applications

(h) Supports common CI environments

Why do we use K8S?

Containers running on plain Docker can fail, be killed, or be destroyed at any time in production, and nothing brings them back automatically. To avoid this and to manage and control the containers, we use K8S.

What is pod vs container vs node?

Pods are the smallest unit of execution in Kubernetes. A pod consists of one or more containers, and each container packages an application together with its binaries and dependencies. Nodes are the physical servers or VMs that make up a Kubernetes cluster and on which the pods run.

How will you ensure that a microservice architecture is there in your app?

To ensure a microservice architecture is in place in an application, it is necessary to break down the application into smaller, independently deployable services. These services should be managed independently and communicate with each other through well-defined APIs. Additionally, each service should be deployed separately, for example in its own container, so that it can be scaled and can fail independently of the others.

For example - YouTube

Think of a platform like YouTube with a separate container for each service: separate microservices for shorts, for streaming, for videos and uploads, and for stories. It is not all running together as one program; it is all running as microservices. All of these have become containers, and K8S manages these containers. So wherever the work is done in small, independent chunks, we can be sure that microservices are in place.

Which is the service that allows you to connect to other worker nodes?

Worker nodes connect to the rest of the cluster through the Kubernetes API server. A Kubernetes cluster is made up of a master node and one or more worker nodes, which communicate with each other to manage the application. The master node is responsible for scheduling and orchestration of the application, while the worker nodes are responsible for running the actual application code.

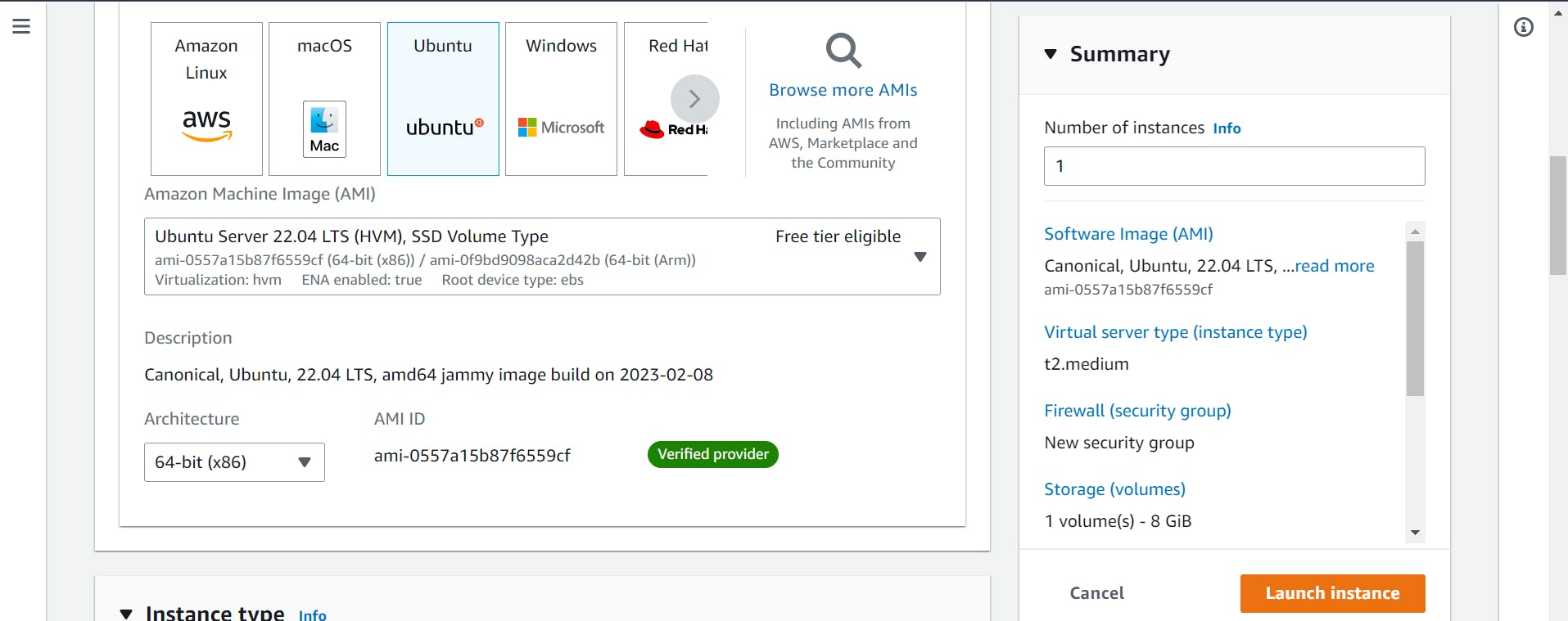

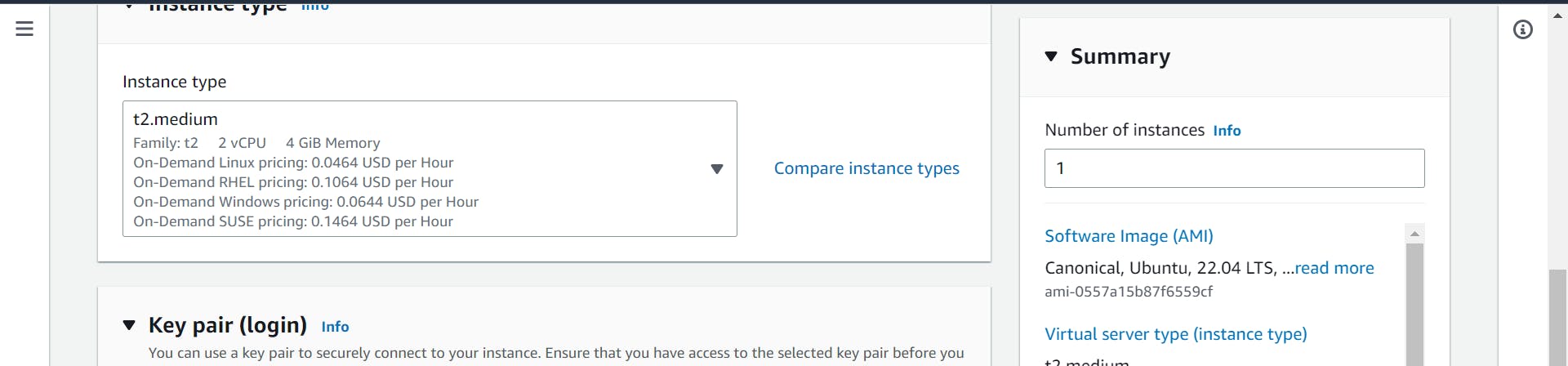

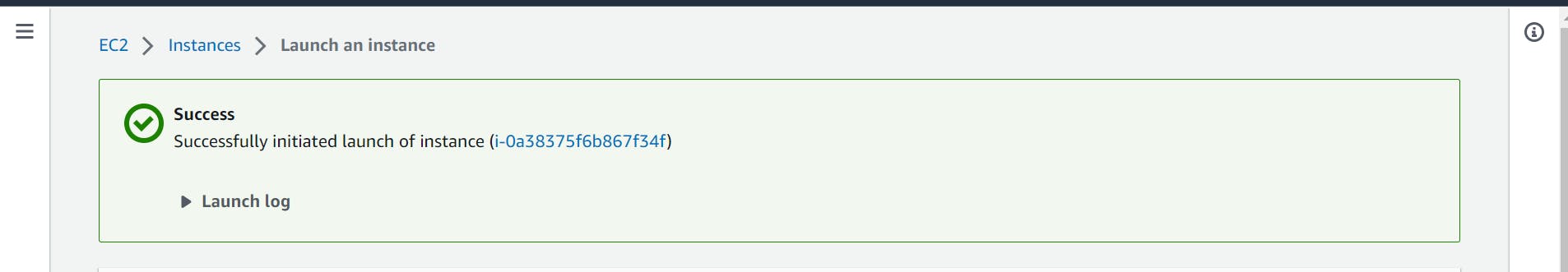

Hands-On

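Make sure you choose t2.medium for master nodes. Minikube needs at least 2 CPUs and 2 GB of memory, which a smaller instance such as t2.micro does not provide.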

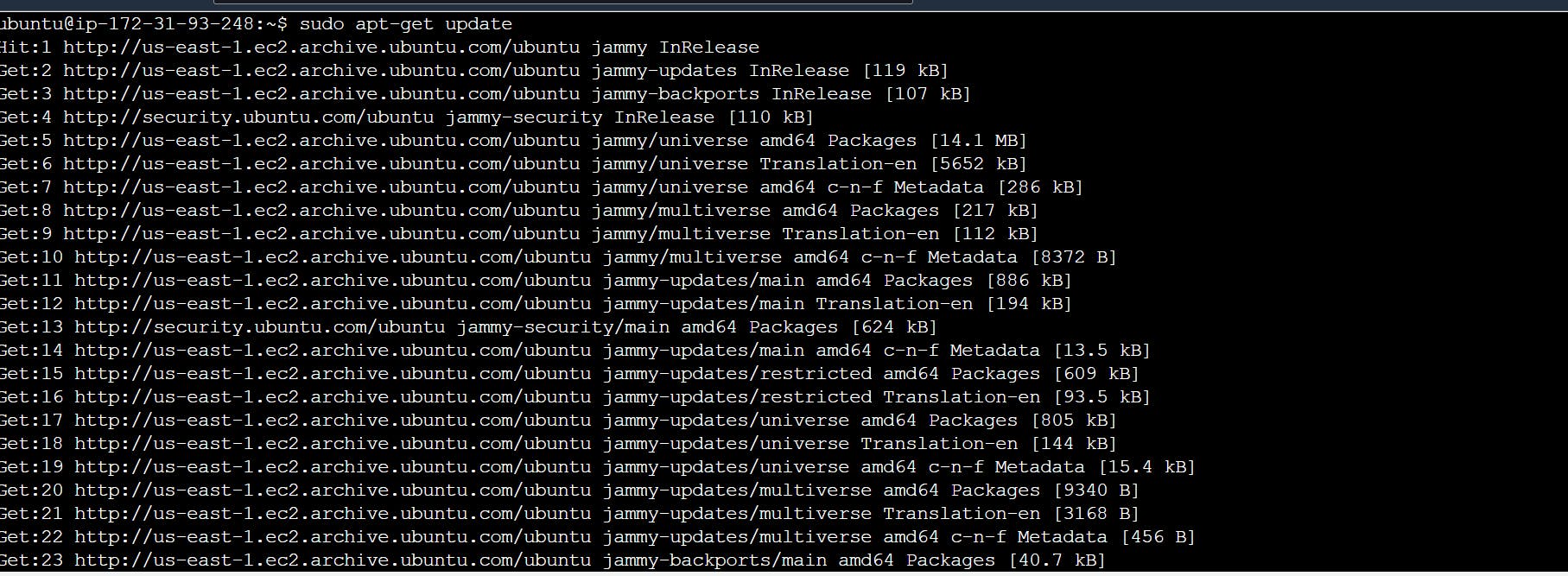

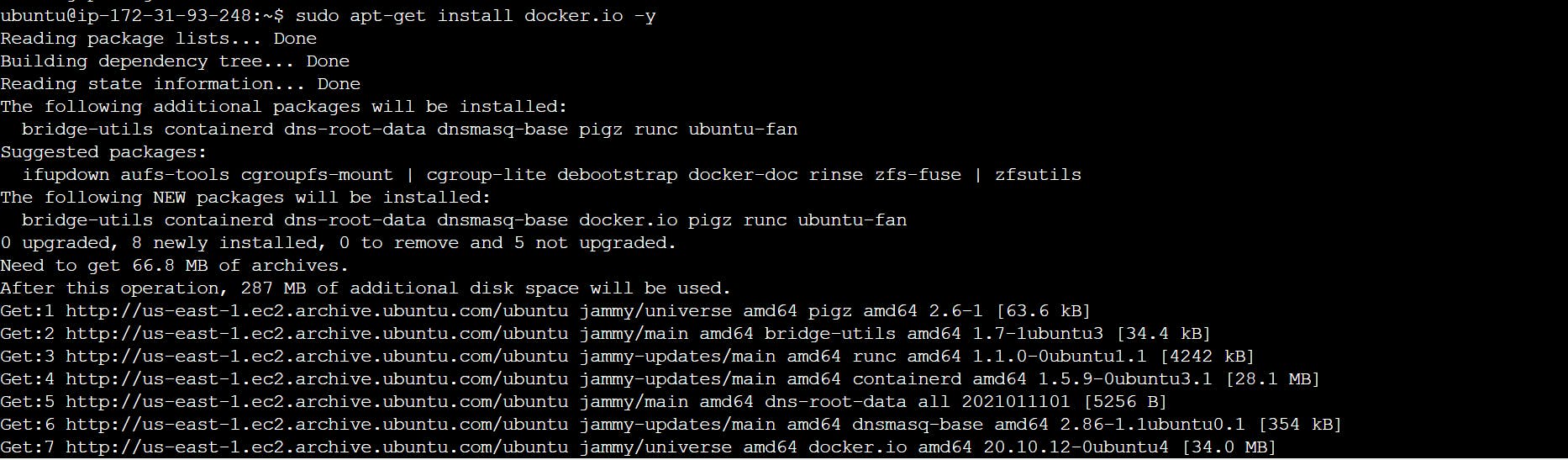

sudo apt-get update

sudo apt-get install docker.io -y

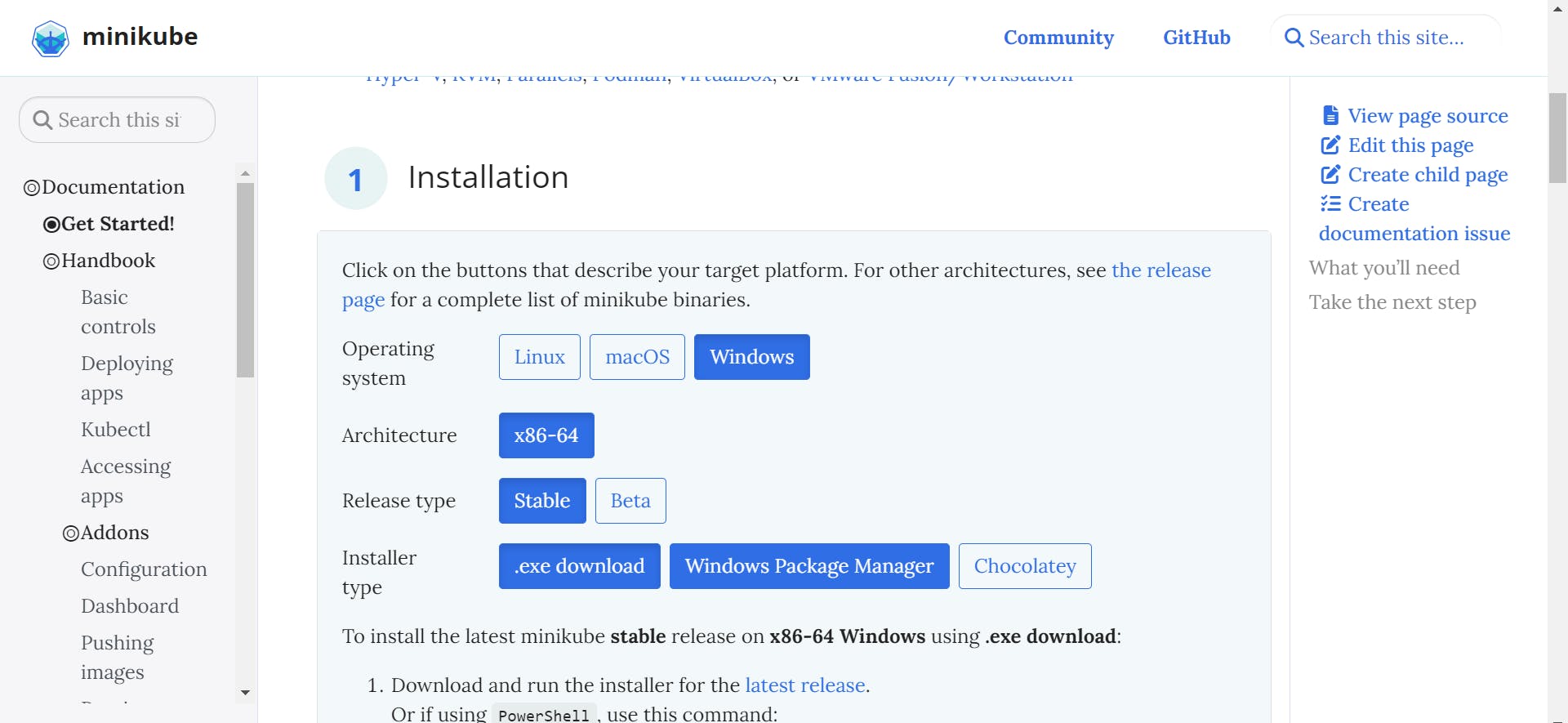

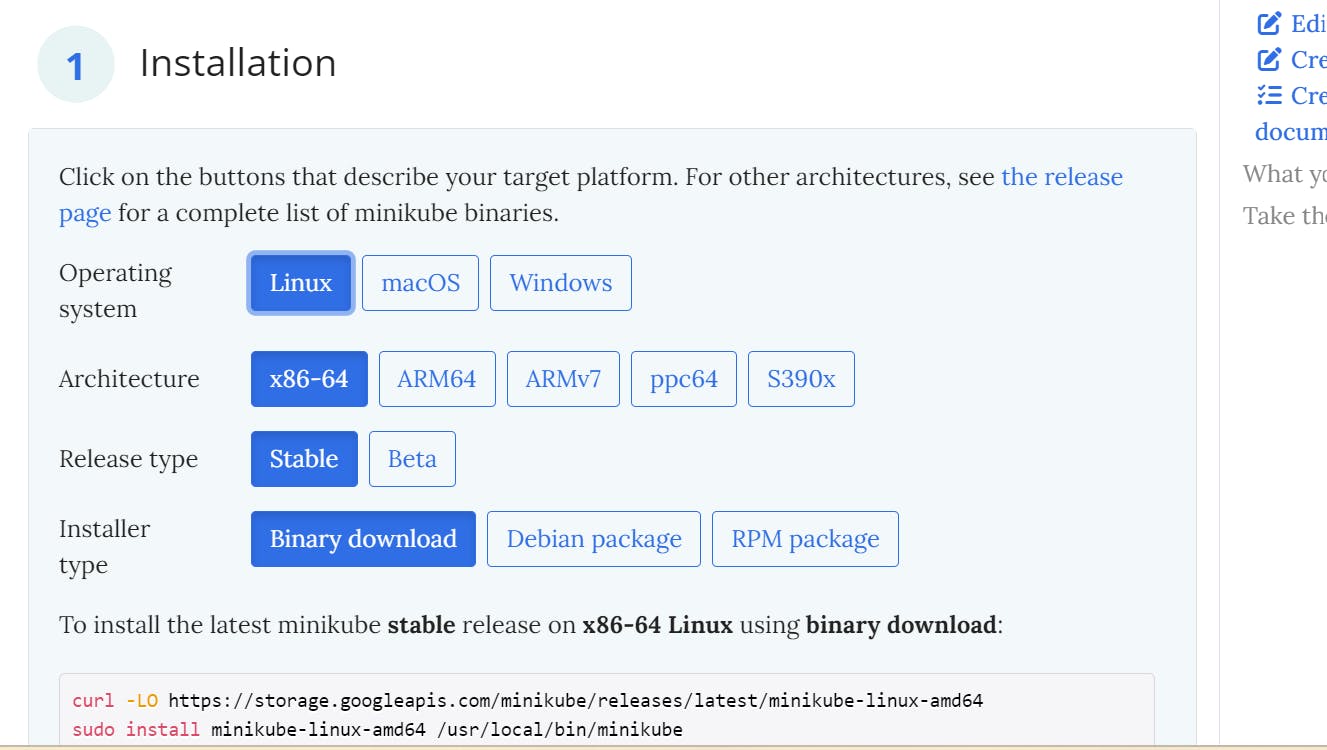

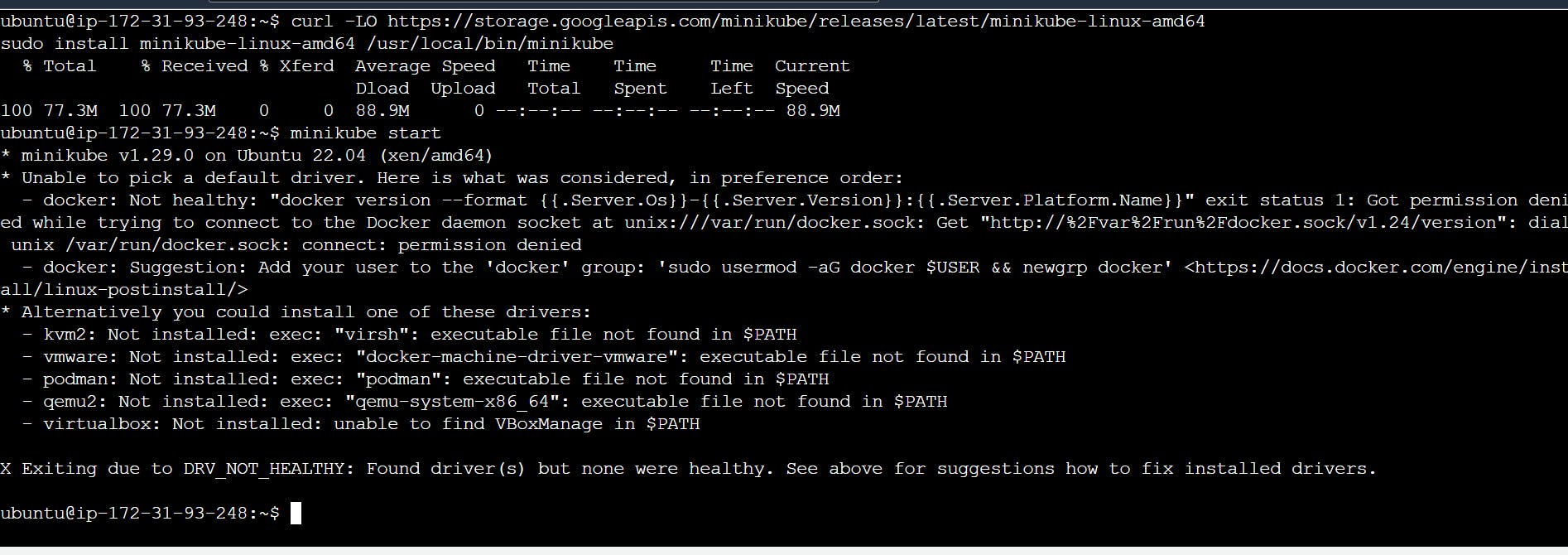

curl -LO https://storage.googleapis.com/minikube/releases/latest/minikube-linux-amd64

sudo install minikube-linux-amd64 /usr/local/bin/minikube

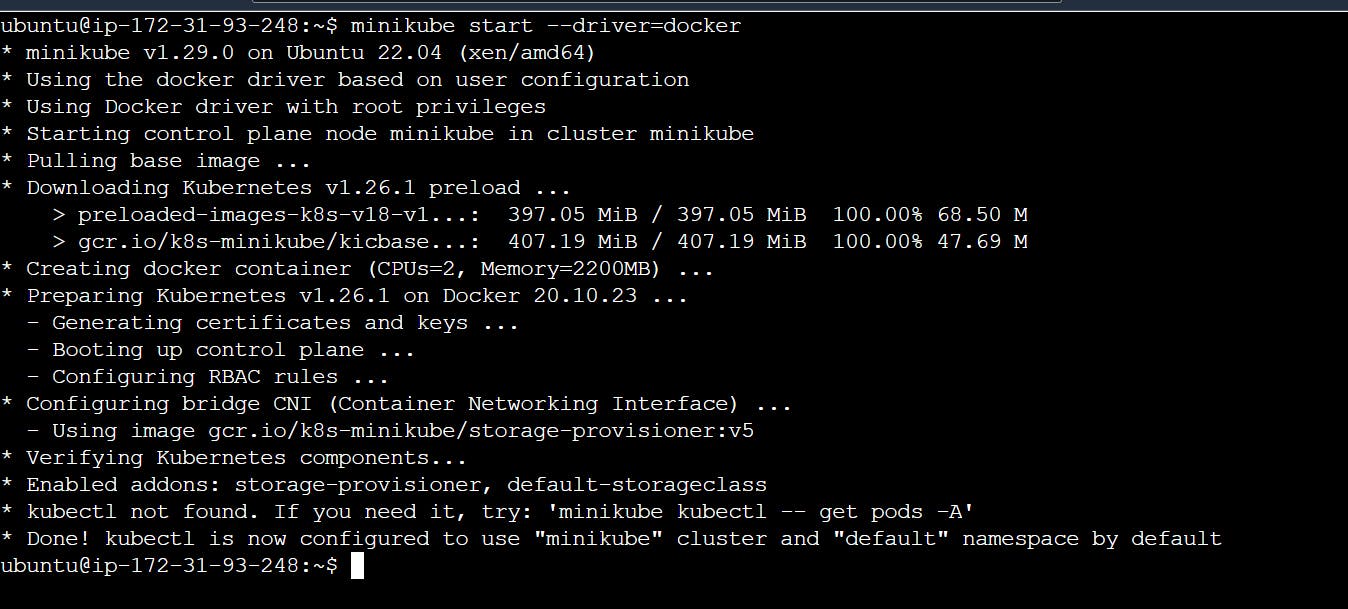

minikube start

If you see an error like this, don't worry.

Use this instead 👇👇👇

minikube start --driver=docker

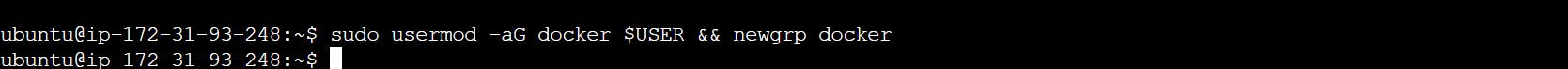

sudo usermod -aG docker $USER && newgrp docker

minikube start --driver=docker

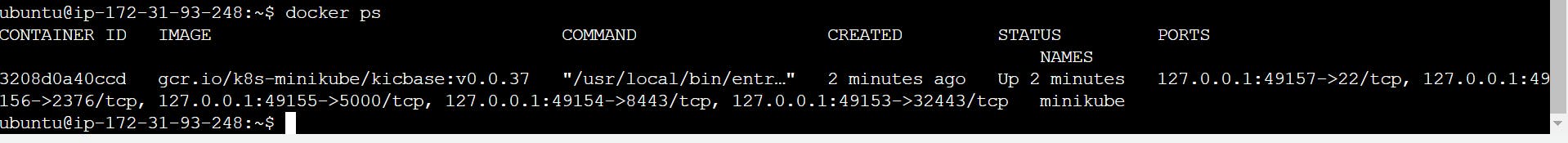

docker ps

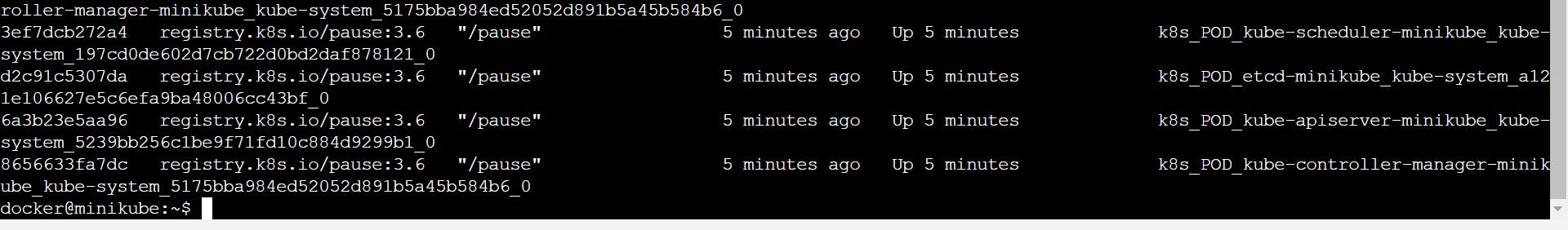

minikube ssh (this opens a shell inside the minikube node)

docker ps

exit

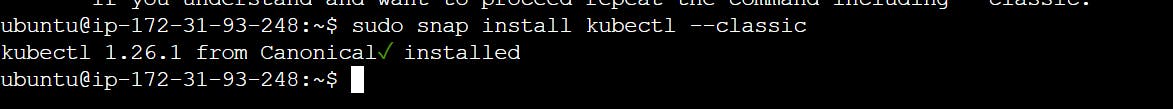

sudo snap install kubectl --classic

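What is the difference between sudo snap install, sudo apt-get install and sudo apt install?

sudo snap install: snap installs self-contained (containerized) packages from the Snap Store. Each snap bundles the application together with its dependencies.

What is a containerized application?

An application packaged together with its dependencies so that it runs isolated from the rest of the system, for example a snap package or a Docker container.

sudo apt-get install: installs .deb packages from the software repositories configured on your system, downloading them from the internet when needed.

**sudo apt install:** does the same job as apt-get install. apt is the newer, more user-friendly front end to the same package system.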

kubectl get pod

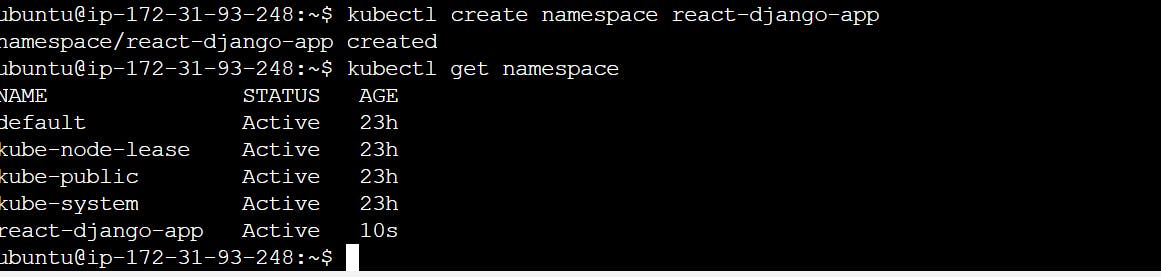

What is the default namespace? How many types of namespaces are there?

A namespace is a way to divide a cluster into named groups so that resources such as pods can be organized and identified.

For example, suppose 3-4 pods are running inside your node: one is a Django app, one is a Java app, and one is a Node.js app.

If I want only the Django pods, I can put them in their own namespace and query that namespace. Just as a name identifies you, a namespace identifies a group of pods.

Types of Namespaces

default namespace: If you create an application without specifying a namespace, it is placed in the default namespace.

kube-system (used for Kubernetes components): The namespace for objects created by the Kubernetes system. This is where your system pods reside.

kube-public (used for public resources): This namespace is readable by all clients, including unauthenticated ones. It is reserved mostly for cluster information that should be visible across the whole cluster.

kube-node-lease: This namespace holds a Lease object for each node. The kubelet renews its node's lease as a heartbeat so that the control plane can detect node failures.

kubectl get pods --namespace=kube-system

How many pods can be created on a node?

By default, GKE allows up to 110 Pods per node on Standard clusters; however, Standard clusters can be configured to allow up to 256 Pods per node. Autopilot clusters have a maximum of 32 Pods per node.

What is a Service Proxy/kube proxy?

The service proxy is a feature of Kubernetes that enables the communication between different services and applications running on the same cluster. It works by routing requests from one service to another, allowing different components of an application to communicate with each other.

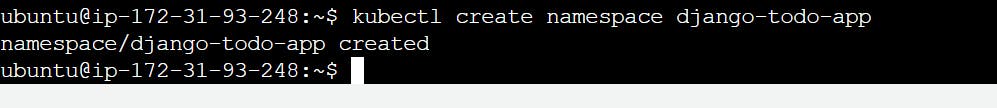

How to create a namespace

kubectl get namespaces

kubectl create namespace django-todo-app

kubectl get namespaces

How to delete namespaces

kubectl delete namespace [your-namespace]

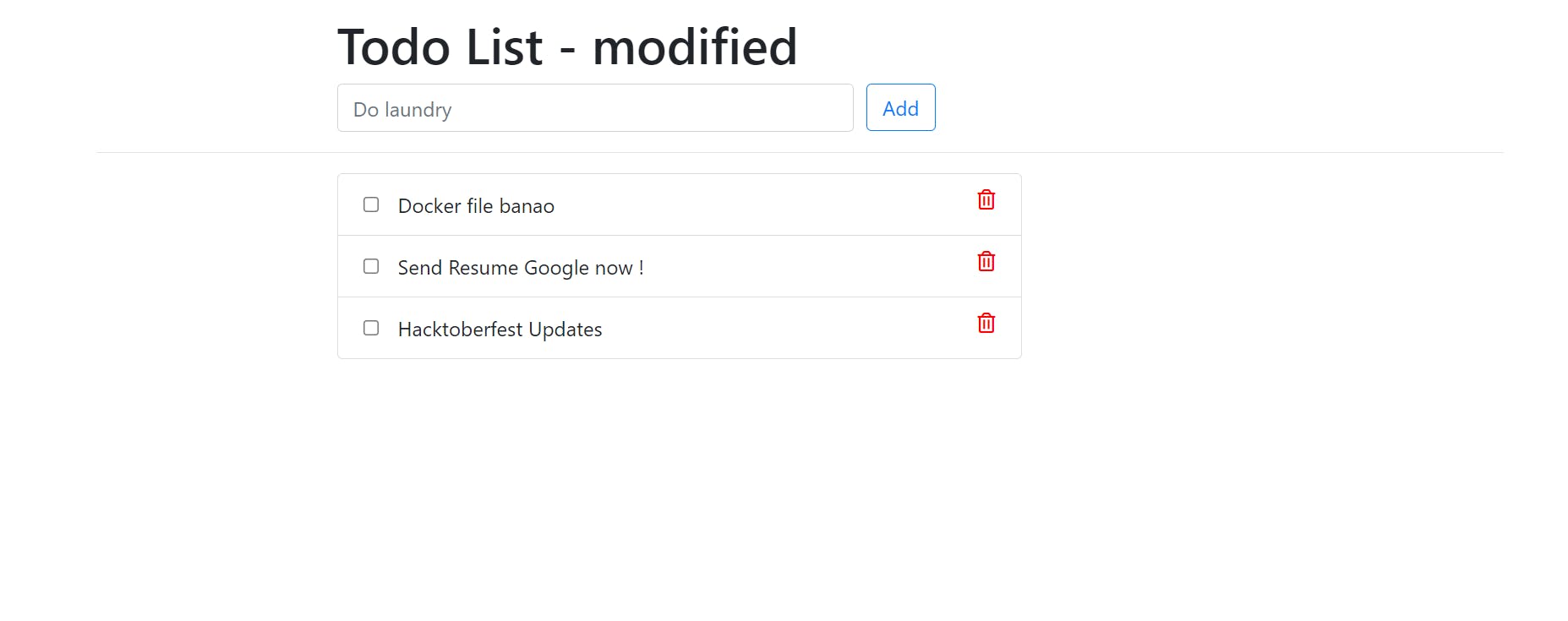

Project 1

kubectl create namespace react-django-app

Your image should already be pushed to Docker Hub. (If you do not know how to push images to Docker Hub, read my Docker articles and you will understand.)

mkdir kubernetes-project

Create a pod.yaml file
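The contents of pod.yaml are not shown here, so the following is only a minimal sketch of what such a file could look like. The namespace comes from the step above; the pod name, container name, image, and port are placeholders that you would replace with your own values.

```shell
# Write a minimal Pod manifest to pod.yaml in the current directory.
# Names, image, and port below are placeholders, not the author's values.
cat > pod.yaml <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: django-todo-pod              # hypothetical pod name
  namespace: react-django-app        # namespace created earlier
spec:
  containers:
    - name: django-todo              # hypothetical container name
      image: your-dockerhub-user/django-todo-app:latest   # replace with the image you pushed
      ports:
        - containerPort: 8000        # assumed application port
EOF
```

The heredoc is just a way to create the file without opening an editor; writing the same YAML in vim works equally well.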

kubectl apply -f pod.yaml

kubectl get pods --namespace=react-django-app

minikube ssh

docker ps

exit

kubectl get pods --namespace=react-django-app -o wide

-o wide = shows extra columns, including the pod's IP address

How to create multiple pods in YAML

vim pod2.yaml

You may get an error when you change a pod file and apply it again.

This error appears because most parts of a running pod, such as its containers, cannot be updated in place. A Deployment can roll out such a change, but a bare pod cannot.

Solution: delete the old pod, then create a new pod file and apply it 👍

How to delete your pod

kubectl delete -f pod.yaml

kubectl apply -f pod2.yaml

kubectl get pods --namespace=react-django-app

minikube ssh

docker ps

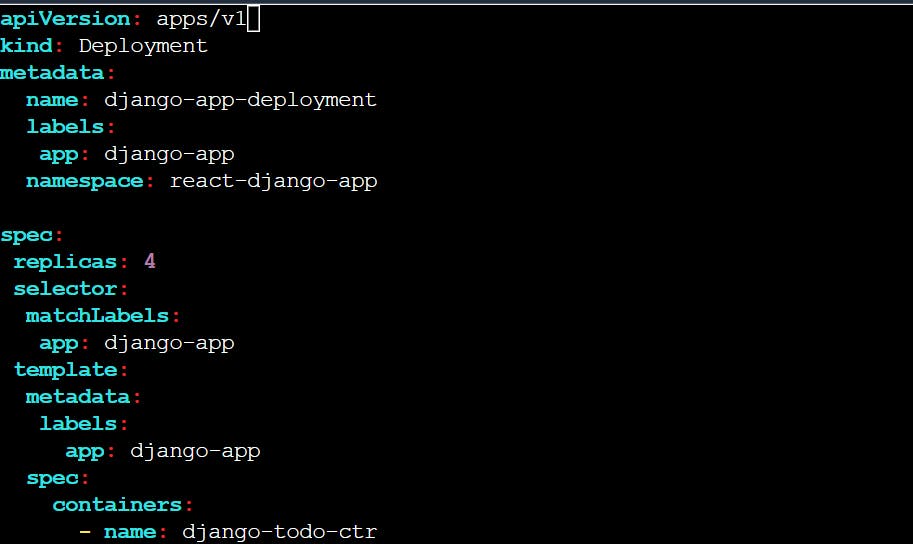

How to Deploy a Project

Why would you put two containers in one pod?

Because one is the main container and the other is a supporting container (for example a database or a proxy).
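As a rough sketch of this idea, the manifest below defines one pod with a main container and a supporting proxy container. The file name, pod name, container names, and the application image are all hypothetical; only the namespace is taken from this walkthrough.

```shell
# One pod, two containers: a main application plus a supporting proxy.
# All names and the application image are placeholders for illustration.
cat > two-container-pod.yaml <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: app-with-proxy               # hypothetical pod name
  namespace: react-django-app
spec:
  containers:
    - name: main-app                 # the main container
      image: your-dockerhub-user/django-todo-app:latest   # replace with your image
      ports:
        - containerPort: 8000        # assumed application port
    - name: proxy                    # the supporting container
      image: nginx:1.14.2
      ports:
        - containerPort: 80
EOF
```

Containers in the same pod share the pod's IP address, so the proxy can reach the main application on localhost.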

Why do we use a Deployment?

A Deployment is used to manage the deployment of applications on Kubernetes clusters. It allows for easier updates and rollbacks, as well as scaling up or down the number of replicas of an application.

We use a Deployment because a bare pod does not heal: if it dies, nothing recreates it.

If I want the pod to be replaced automatically when it dies, I have to create it through a Deployment.

What is a Deployment?

A Deployment is a Kubernetes object that describes the desired state of an application: which container image to run and how many replicas of its pod to keep running. Other resources the application needs, such as services and ingresses, are created alongside it. The Deployment also handles rolling updates, rollbacks, and scaling up or down the number of replicas of an application.

There can be many pods for one application, but there is usually one deployment file per application.

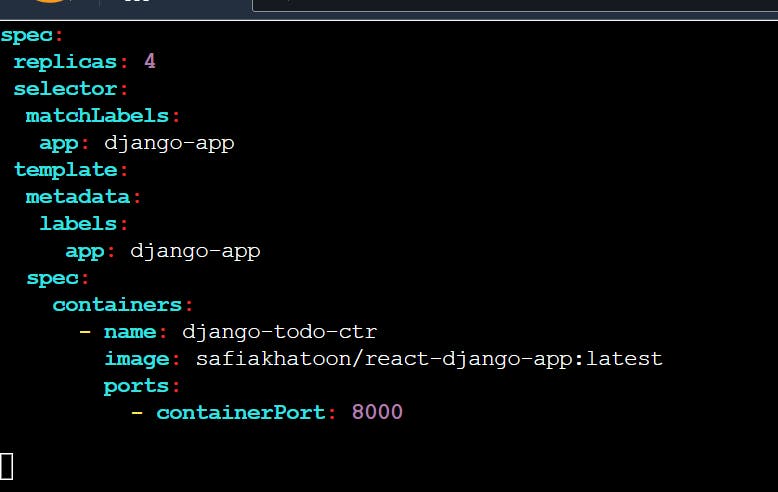

Deployment Kubernetes

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx-deployment
      labels:
        app: nginx
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: nginx
      template:
        metadata:
          labels:
            app: nginx
        spec:
          containers:
          - name: nginx
            image: nginx:1.14.2
            ports:
            - containerPort: 80

With a Deployment you also get auto-healing, and you can scale the application (within the capacity of your instance). Neither happens for a pod created without a Deployment.

what are labels?

Labels are key-value pairs that are attached to pods, replication controllers and services. They are used as identifying attributes for objects such as pods and replication controllers. They can be added to an object at creation time and can be added or modified at the run time.

what are selectors?

Labels do not provide uniqueness. In general, we can say many objects can carry the same labels. Label selectors are core grouping primitives in Kubernetes. They are used by the users to select a set of objects.

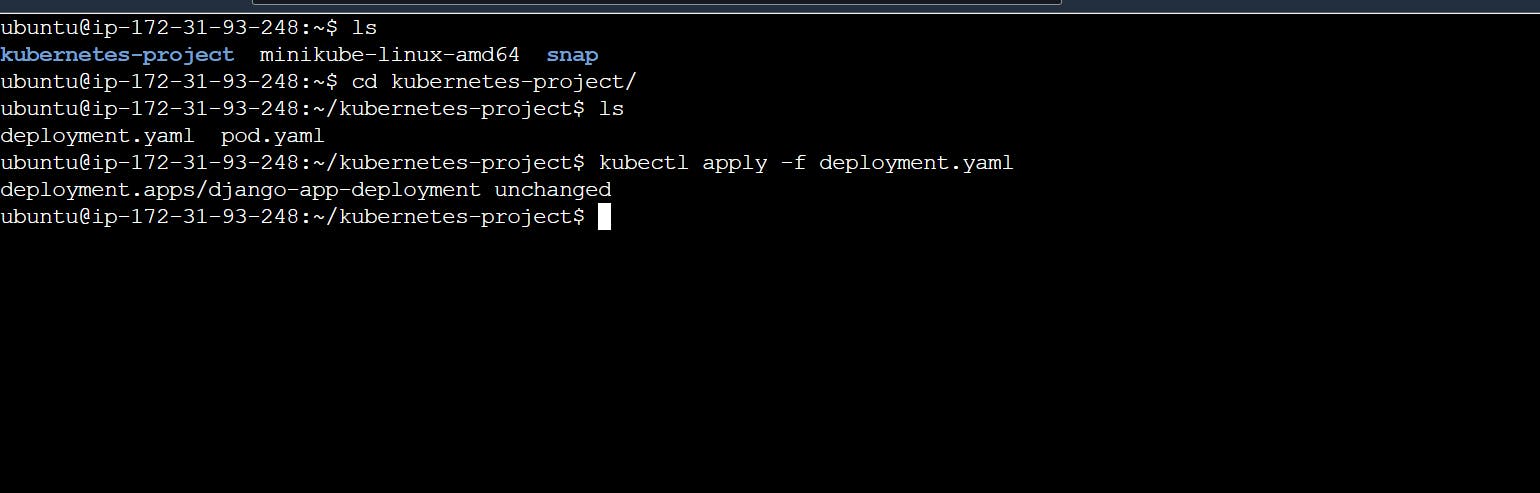

step: 1

create a deployment file
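The deployment file itself is not shown, so here is a minimal sketch of one. The Deployment name is inferred from the pod name that appears in the delete command in this section (django-app-deployment-...); the label, image, port, and replica count are assumptions.

```shell
# Write a Deployment manifest to deployment.yaml.
# The label, image, port, and replica count are assumed values.
cat > deployment.yaml <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: django-app-deployment        # inferred from the pod name used in this section
  namespace: react-django-app
  labels:
    app: django-app                  # hypothetical label
spec:
  replicas: 3                        # assumed number of pods
  selector:
    matchLabels:
      app: django-app                # must match the pod template labels below
  template:
    metadata:
      labels:
        app: django-app
    spec:
      containers:
        - name: django-app
          image: your-dockerhub-user/django-todo-app:latest   # replace with your image
          ports:
            - containerPort: 8000    # assumed application port
EOF
```

The selector and the template labels have to agree; that is how the Deployment knows which pods belong to it.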

kubectl apply -f deployment.yaml

kubectl get pods --namespace=react-django-app

How to delete the deployment

kubectl delete -f deployment.yaml

kubectl get pods -n react-django-app

How to delete a single pod

kubectl delete pods django-app-deployment-8564d6c7d9-7pcvh -n=react-django-app

-n: short form of --namespace

How will you ensure zero downtime?

Zero downtime can be achieved by using Kubernetes features such as rolling updates, fault tolerance, and high availability. Rolling updates allow for a controlled deployment of new versions of an application, while fault tolerance allows for the application to remain running even if a node fails. High availability ensures that the application is always available to users.
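A rolling update can be sketched in a Deployment manifest like the one below. This is an illustration under assumptions, not a tuned configuration: the file name, label, image, port, probe path, and timing values are all placeholders. The idea is that no old pod is removed (maxUnavailable: 0) until a new pod has passed its readiness probe.

```shell
# Deployment with an explicit rolling-update strategy and a readiness probe.
# File name, image, port, probe path, and timings are assumed values.
cat > rolling-update-deployment.yaml <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: django-app-deployment
  namespace: react-django-app
spec:
  replicas: 3
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 0              # never drop below the desired replica count
      maxSurge: 1                    # add at most one extra pod during the update
  selector:
    matchLabels:
      app: django-app
  template:
    metadata:
      labels:
        app: django-app
    spec:
      containers:
        - name: django-app
          image: your-dockerhub-user/django-todo-app:latest   # replace with your image
          ports:
            - containerPort: 8000
          readinessProbe:            # traffic is sent only to pods that pass this check
            httpGet:
              path: /
              port: 8000
            initialDelaySeconds: 5
            periodSeconds: 5
EOF
```

Changing the image tag in this file and applying it again would replace the pods one at a time instead of all at once.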

Why is a Deployment alone not enough?

Because pod IP addresses are not constant: pods are replaced and the number of replicas changes, so the addresses keep changing.

That's why we use a Service.

what is service?

A service in Kubernetes is a resource that provides access to a set of pods. It acts as an abstraction layer between the pods and the user, allowing the user to access the pods without having to know the details of the underlying infrastructure. Services also provide load balancing and health-checking features, making it easier to manage the underlying pods.

A service in Kubernetes is a logical set of pods, which works together. With the help of services, users can easily manage load-balancing configurations.

Types of services:

cluster IP:

Cluster IP is a Kubernetes service type that provides a stable IP address for the service. This IP address is used by clients to send requests to the service and is accessible only within the cluster. Cluster IP services are typically used for internal services that are not accessible from outside the cluster.

load balancer:

A load balancer is a device that acts as a reverse proxy and distributes network or application traffic across several servers. Load balancers are used to improve application performance by decreasing the load on servers and improving availability. In Kubernetes, a load balancer is created when a service of type LoadBalancer is created. The load balancer will then route traffic to the correct pods.

node port:

NodePort is a type of service in Kubernetes that allows external traffic to access services running on the cluster through a specific port on each node of the cluster. NodePort is the simplest way to get external traffic directly to your service.

external name:

ExternalName is a type of service that works as an alias. It maps the service name to an external DNS name (a CNAME record) instead of to a set of pods.

Let's Make a Service

vim service.yaml
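A minimal sketch of service.yaml could look like this. The service name and namespace come from the minikube service command used in this section, and 30109 is the node port that appears later in the ngrok command; the selector label and the ports 80 and 8000 are assumptions that must match your own deployment.

```shell
# Write a NodePort Service manifest to service.yaml.
# The selector label and the port/targetPort values are assumptions.
cat > service.yaml <<'EOF'
apiVersion: v1
kind: Service
metadata:
  name: django-todo-service
  namespace: react-django-app
spec:
  type: NodePort
  selector:
    app: django-app                  # hypothetical label; must match the pod labels
  ports:
    - port: 80                       # port of the service inside the cluster
      targetPort: 8000               # assumed container port
      nodePort: 30109                # port opened on the node (allowed range 30000-32767)
EOF
```

After saving the file, kubectl apply -f service.yaml creates the service. If nodePort is left out, Kubernetes picks a free port from the allowed range on its own.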

kubectl get svc -n react-django-app

where,

svc = short form of service

-n = short form of --namespace

The IP shown is the K8s cluster IP, not the IP of my Ubuntu machine.

minikube service django-todo-service -n react-django-app --url

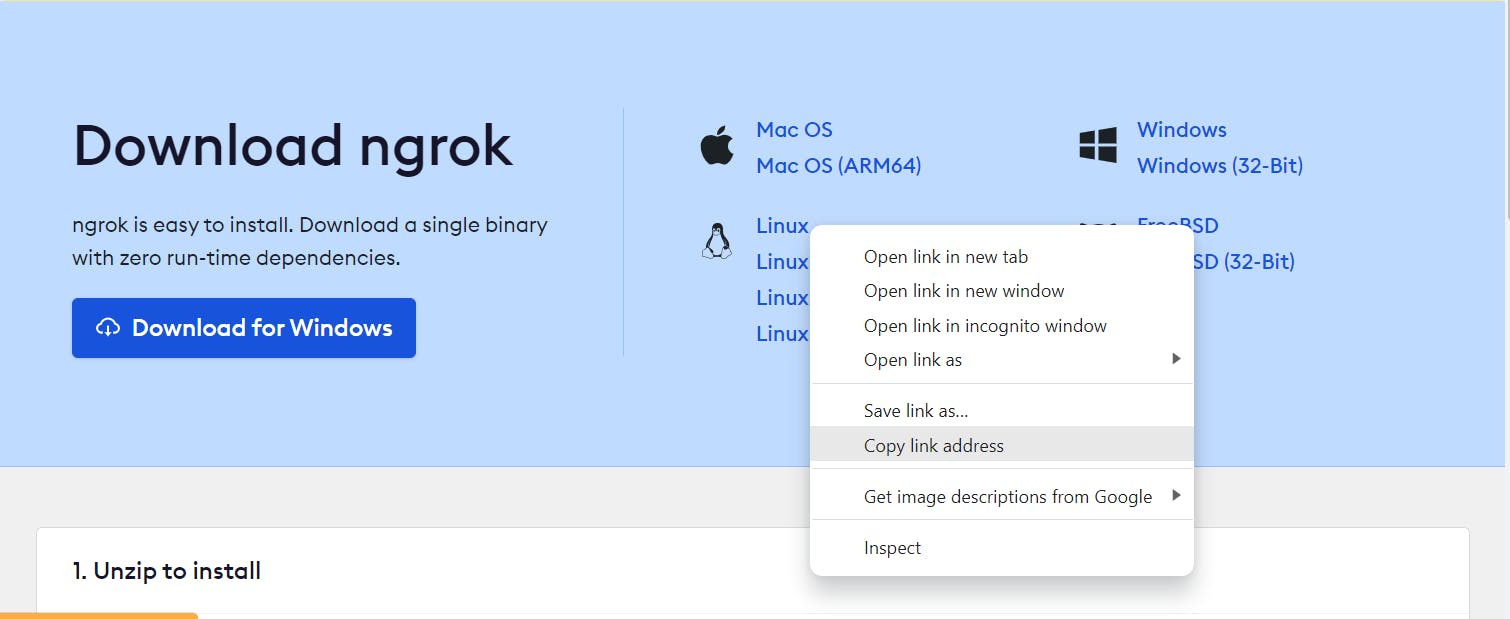

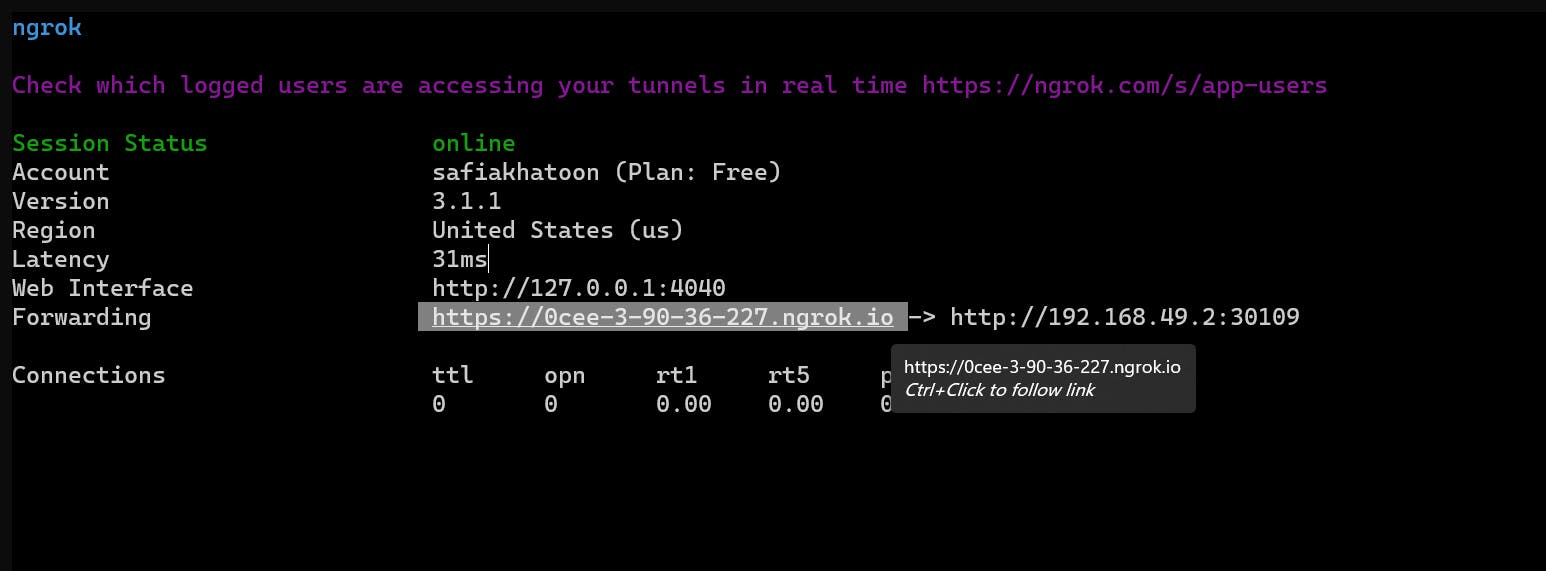

If you want to access your app in a browser from outside the machine, you can use a tool called ngrok.

LOCAL ---------- TUNNEL (ngrok) ---------- GLOBAL

what is ngrok?

Ngrok is a tool that allows developers to securely tunnel traffic to their localhost. It creates a secure public URL that can be used to access a web application running on the local development machine. It is useful for testing and debugging applications in the cloud.

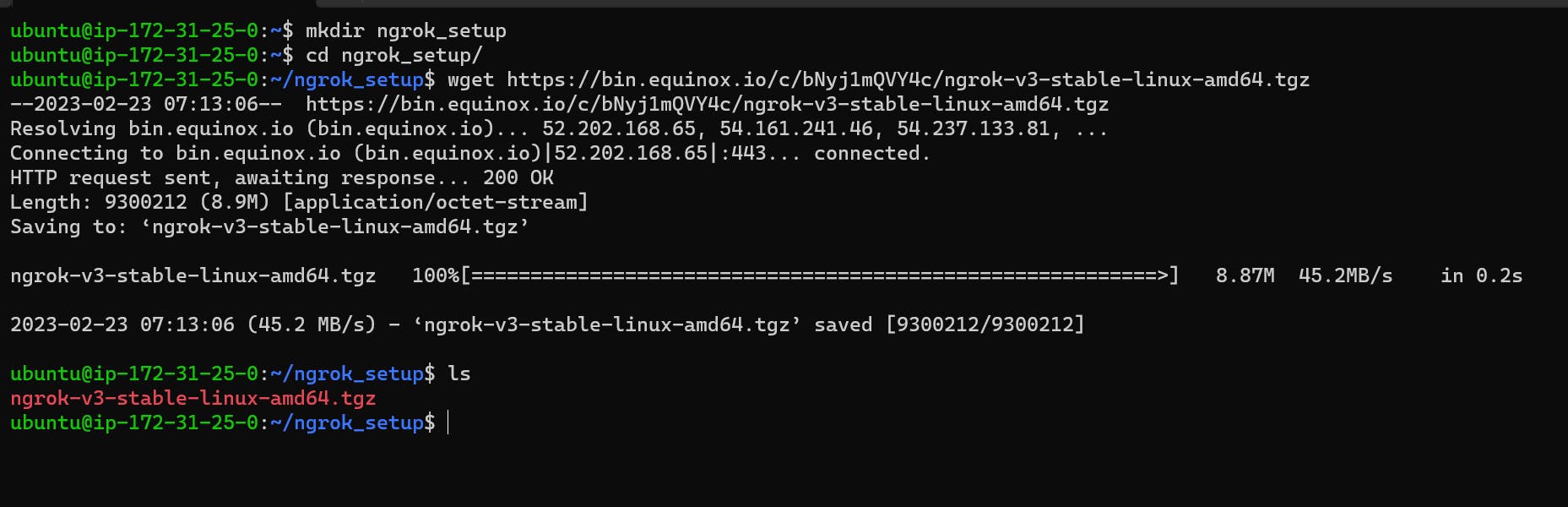

Testing Environment Deployment

click on sign up for free

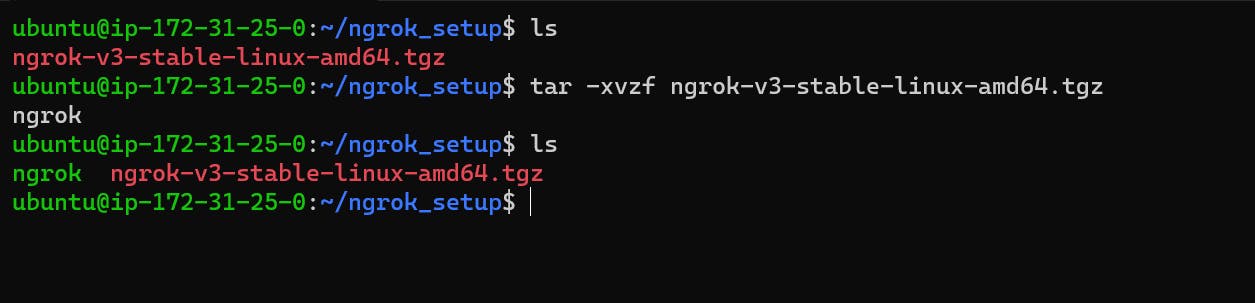

wget https://bin.equinox.io/c/bNyj1mQVY4c/ngrok-v3-stable-linux-amd64.tgz

tar -xvzf ngrok-v3-stable-linux-amd64.tgz

x - extract

v - verbose

z - decompress with gzip

f - file; it should come last, just before the file name.

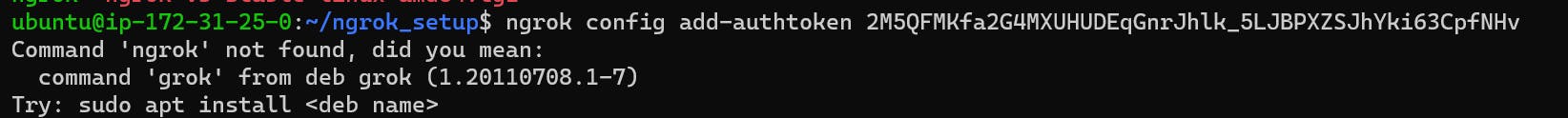

ngrok config add-authtoken YOUR_AUTHTOKEN

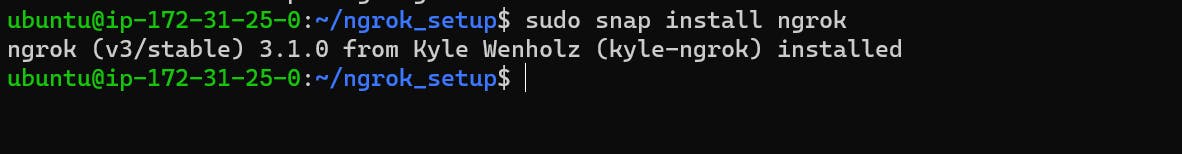

sudo snap install ngrok

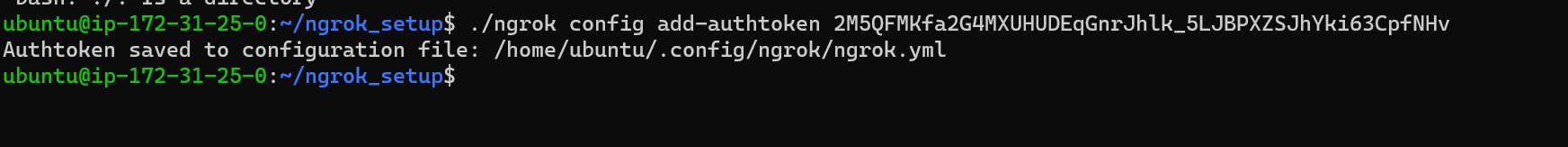

./ngrok config add-authtoken YOUR_AUTHTOKEN

./ngrok http 192.168.49.2:30109

What is Ingress?

Ingress is a way of allowing external traffic to access services running in a Kubernetes cluster. It routes HTTP and HTTPS requests to the correct services based on the hostname and URL path. It can also provide TLS termination and load balancing for services running in the cluster.

In Kubernetes, an Ingress is an object that allows access to your Kubernetes services from outside the Kubernetes cluster.

vim ingress.yaml
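Here is a minimal sketch of what ingress.yaml could contain. The backend service name and namespace are the ones used earlier in this post; the Ingress name, the host django-todo.local, and the service port 80 are assumptions.

```shell
# Write an Ingress manifest that routes one hostname to the service.
# The Ingress name, host, and service port are assumed values.
cat > ingress.yaml <<'EOF'
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: django-todo-ingress          # hypothetical name
  namespace: react-django-app
spec:
  rules:
    - host: django-todo.local        # hypothetical hostname, mapped in /etc/hosts
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: django-todo-service
                port:
                  number: 80         # assumed service port
EOF
```

An Ingress only works when an ingress controller is running in the cluster; on minikube one can be enabled with minikube addons enable ingress. The /etc/hosts edit then points the chosen hostname at the cluster's IP address.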

kubectl apply -f ingress.yaml

sudo vim /etc/hosts

Important Topics in k8s

what is Kubernetes networking?

Kubernetes networking refers to how different components of a Kubernetes cluster communicate with each other over a network. It involves assigning unique IP addresses to each pod and service and setting up rules for how traffic is routed between them.

What is the Ingress network, and how does it work?

In Kubernetes, incoming (ingress) traffic is handled by an Ingress controller, which sits between external users and the services running inside the cluster. It routes requests to the correct services based on the hostname and URL path defined in Ingress rules, and it can terminate TLS for them.

What are Secrets?

Secrets in Kubernetes are objects that store sensitive information, such as passwords, API keys, and certificates. They are used to securely store and manage sensitive data that is needed by applications and services running in the cluster.

HANDS-ON:

Create two files, username.txt and password.txt

echo "root" > username.txt; echo "password" > password.txt

ls

kubectl create secret generic mysecret --from-file=username.txt --from-file=password.txt

kubectl get secret

kubectl describe secret mysecret

vi deploysecret.yaml
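The contents of deploysecret.yaml are not shown, so this is a sketch under assumptions. The pod name myvolsecret and the secret name mysecret come from the commands in this section. The container name, the ubuntu image, the sleep loop, and the volume name are hypothetical. The mount path /tmp is chosen so that the cd /tmp and cat steps below find the files directly; a dedicated subdirectory such as /tmp/mysecrets would be an equally valid choice.

```shell
# Pod that mounts the secret "mysecret" as files.
# Container name, image, command, volume name, and mount path are assumptions.
cat > deploysecret.yaml <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: myvolsecret
spec:
  containers:
    - name: c1                       # hypothetical container name
      image: ubuntu                  # assumed image; it provides /bin/bash
      command: ["/bin/bash", "-c", "while true; do sleep 3600; done"]   # keep the pod running
      volumeMounts:
        - name: secret-volume
          mountPath: /tmp            # each key of the secret becomes a file here
          readOnly: true
  volumes:
    - name: secret-volume            # hypothetical volume name
      secret:
        secretName: mysecret
EOF
```

Each key in the secret (username.txt and password.txt) shows up as a file with the same name under the mount path.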

kubectl apply -f deploysecret.yaml

kubectl get pods

kubectl exec myvolsecret -it -- /bin/bash

cd /tmp

ls

cat password.txt

cat username.txt

exit

What are ConfigMaps?

ConfigMaps are objects in Kubernetes that store configuration data in key-value pairs. They are used to store non-sensitive configuration data that is needed by applications and services running in the cluster.

HANDS-ON:(Using Volume)

Create a configuration file using vim sample.conf

mymap - object name

(you can choose any name you like)

kubectl create configmap mymap --from-file=sample.conf

kubectl get configmap

kubectl describe configmap mymap

vim deployconfig.yaml
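A possible deployconfig.yaml is sketched below. The pod name myvolconfig, the ConfigMap name mymap, and the /tmp/config location are taken from the commands in this section; the container name, image, command, and volume name are assumptions.

```shell
# Pod that mounts the ConfigMap "mymap" as files under /tmp/config.
# Container name, image, command, and volume name are assumptions.
cat > deployconfig.yaml <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: myvolconfig
spec:
  containers:
    - name: c1                       # hypothetical container name
      image: ubuntu                  # assumed image; it provides /bin/bash
      command: ["/bin/bash", "-c", "while true; do sleep 3600; done"]   # keep the pod running
      volumeMounts:
        - name: config-volume
          mountPath: /tmp/config     # sample.conf appears as /tmp/config/sample.conf
  volumes:
    - name: config-volume            # hypothetical volume name
      configMap:
        name: mymap
EOF
```

Because the ConfigMap was created with --from-file=sample.conf, its single key is sample.conf, and that is the file name you will see inside the mounted directory.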

kubectl apply -f deployconfig.yaml

kubectl get pods

kubectl exec myvolconfig -it -- /bin/bash

cd /tmp

ls

cd config/

exit

\=============================================================

HANDS-ON:(Using env)

vim deployment.yml
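For the env variant, a sketch of deployment.yml could look like this. The pod name myenvconfig, the variable MYENV, and the ConfigMap mymap come from the commands in this section; the container name, image, and command are assumptions, and the key sample.conf assumes the ConfigMap was created from that file as shown above.

```shell
# Pod that reads one ConfigMap key into the environment variable MYENV.
# Container name, image, and command are assumptions.
cat > deployment.yml <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: myenvconfig
spec:
  containers:
    - name: c1                       # hypothetical container name
      image: ubuntu                  # assumed image; it provides /bin/bash
      command: ["/bin/bash", "-c", "while true; do sleep 3600; done"]   # keep the pod running
      env:
        - name: MYENV                # variable printed later with echo $MYENV
          valueFrom:
            configMapKeyRef:
              name: mymap            # the ConfigMap created earlier
              key: sample.conf       # key name equals the source file name
EOF
```

With this setup the whole content of sample.conf becomes the value of MYENV inside the container.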

kubectl apply -f deployment.yml

kubectl get pod

kubectl exec myenvconfig -it -- /bin/bash

env

echo $MYENV

exit

\=============================================================

When to use kubectl apply and when to use kubectl create?

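kubectl create is imperative: it creates a new resource and fails if the resource already exists. kubectl apply is declarative: it creates the resource if it does not exist and otherwise updates the existing resource to match the file, so the same command can be run again after every change.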

what is the difference between a config map as a volume and a config map as a variable?

When a ConfigMap is mounted as a volume, each key appears as a file inside the container, which suits whole configuration files. When a ConfigMap is used as a variable, the value of a key is injected as an environment variable, which suits individual settings.

Difference between config map and secrets?

The main difference between ConfigMaps and Secrets in Kubernetes is that ConfigMaps are used to store non-sensitive configuration data, while Secrets are used to store sensitive data such as passwords, API keys, and other confidential information. Here are some key differences between ConfigMaps and Secrets:

- ConfigMaps are stored in plain text. Secrets are stored base64-encoded, which is an encoding and not encryption; they are encrypted only if encryption at rest is enabled for the cluster.

- Access to both is controlled by the cluster's authorization rules (RBAC), so access to Secrets can and should be restricted to the users and pods that need them.

- ConfigMaps are typically used for configuration data that is not sensitive, such as environment variables, while Secrets are used for sensitive data that should not be exposed, such as database passwords or API keys.
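To see why base64 encoding alone does not protect a value, here is a small sketch that runs without a cluster. The value password is the one used in the hands-on above (written here without a trailing newline); the variable names are only for illustration.

```shell
# Encode a value the way it appears in a Secret manifest,
# then decode it again to show that no key is needed.
encoded=$(printf '%s' 'password' | base64)
echo "$encoded"        # prints cGFzc3dvcmQ=

decoded=$(printf '%s' "$encoded" | base64 --decode)
echo "$decoded"        # prints password
```

Anyone who can read the Secret object can reverse the encoding in the same way, which is why limiting access to Secrets matters.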

Thank you for reading this blog. Hope it helps.

— Safia Khatoon

Happy Learning :)